Introduction

Neural networks are the backbone of modern artificial intelligence, enabling machines to perform tasks that once required human intelligence. This guide will take you through the fundamental concepts of neural networks, their types, how they learn, and their practical applications. We’ll also provide implementation examples in Python.

1. Basic Components of a Neural Network

Neurons

A neuron is the basic building block of a neural network. Each neuron receives one or more inputs, computes a weighted sum of them, and passes that sum through an activation function to produce its output.
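To make the weighted sum concrete, here is a minimal sketch of a single neuron in plain Python. The input values, weights, and bias are made up for illustration, and the choice of a sigmoid activation is an assumption; any activation function could take its place.

```python
import math

def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Illustrative values only, not taken from any dataset.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

# Weighted sum: 0.2 - 0.3 - 0.4 + 0.1 = -0.4, then sigmoid(-0.4).
output = neuron_output(inputs, weights, bias)
print(f"neuron output: {output:.4f}")
```

Changing a weight changes how strongly the matching input pushes the output up or down, which is exactly what training adjusts.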

Layers

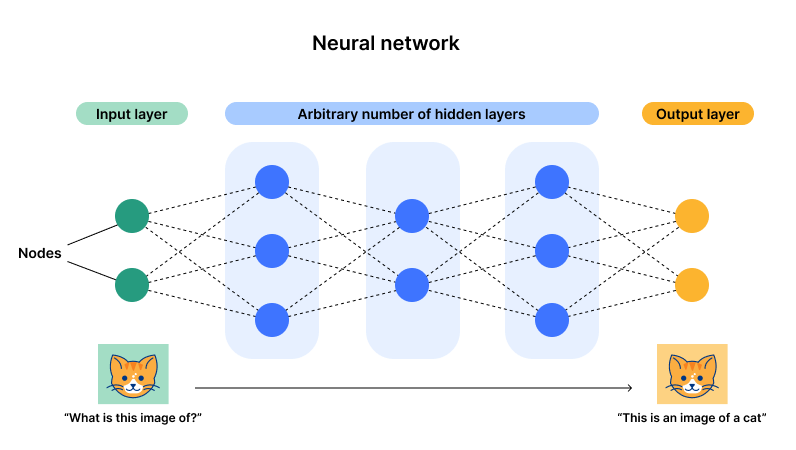

- Input Layer: This layer receives the initial data and passes it to the next layer.

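- Hidden Layers: These layers sit between the input and output layers. Each one transforms the output of the previous layer, progressively extracting more useful features from the data.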

- Output Layer: This layer produces the final output of the network.

Weights and Biases

Weights are learnable parameters that scale each input, determining how strongly it influences a neuron's output. A bias is an additional learnable parameter added to the weighted sum, which shifts the neuron's output independently of its inputs.

Activation Functions

Activation functions introduce non-linearity into the model, allowing it to learn complex patterns. Common activation functions include:

- Sigmoid: $\sigma(x) = \frac{1}{1 + e^{-x}}$

- Tanh: $\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

- ReLU: $\text{ReLU}(x) = \max(0, x)$
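The three functions listed above translate almost directly into code. The sketch below uses only Python's standard library and works on single numbers; real frameworks apply the same formulas element-wise to whole arrays.

```python
import math

def sigmoid(x):
    """Maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Maps any real number into (-1, 1), centered at zero."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

def relu(x):
    """Passes positive values through unchanged and clips negatives to zero."""
    return max(0.0, x)

# Compare the three functions on a few sample inputs.
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  "
          f"tanh={tanh(x):.4f}  relu={relu(x):.1f}")
```

Sigmoid and tanh flatten out for large positive or negative inputs, while ReLU stays linear for positive inputs, which is one reason it is a common default in hidden layers.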

2. Types of Neural Networks

3. How Neural Networks Learn

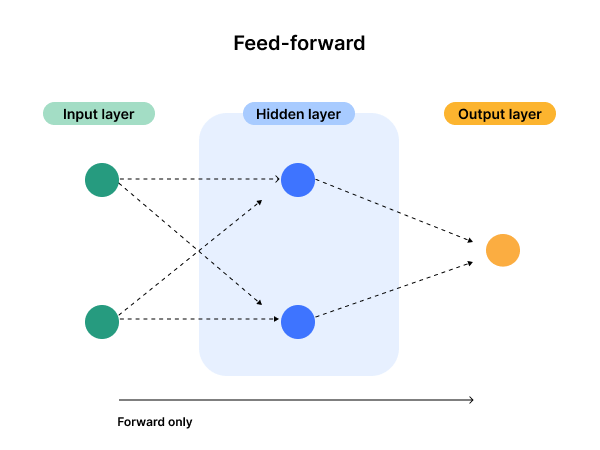

Forward Propagation

During forward propagation, the input data passes through the network one layer at a time. Each layer's output becomes the next layer's input until the output layer produces a prediction. For example, a feedforward neural network can predict house prices based on features like the number of bedrooms, square footage, and age of the house.
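A forward pass for the house-price example might look like the sketch below. Everything specific here is an assumption made for illustration: the network shape (3 inputs, 2 hidden neurons, 1 output), the hand-picked weights, and the feature scaling. The network is untrained, so the predicted number carries no real meaning.

```python
def relu(x):
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

# Hypothetical, hand-picked parameters for an untrained network.
W1 = [[0.5, 1.0, -0.2],   # hidden neuron 1
      [0.3, 0.8, -0.1]]   # hidden neuron 2
b1 = [0.0, 0.1]
W2 = [[1.5, 2.0]]         # single output neuron
b2 = [0.5]

def predict(features):
    """Forward propagation: input -> hidden layer (ReLU) -> linear output."""
    hidden = dense(features, W1, b1, activation=relu)
    return dense(hidden, W2, b2)[0]

# Assumed feature scaling: bedrooms, square footage in thousands, age in decades.
house = [3.0, 1.5, 2.0]
price = predict(house)
print(f"predicted price (arbitrary units): {price:.2f}")
```

The output layer has no activation because price prediction is a regression task, where the network should be free to output any real value.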

Loss Function

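The loss function measures the difference between the predicted output and the actual output as a single number, where lower is better. Common loss functions include mean squared error (MSE), typically used for regression, and categorical cross-entropy, typically used for multi-class classification.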
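Both losses are short enough to write out by hand. In this sketch the function names and the sample values are illustrative; the cross-entropy version assumes a one-hot target and a list of predicted class probabilities for a single example, and clamps probabilities with a small `eps` to avoid taking the logarithm of zero.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy for one example: y_true is one-hot, y_pred are probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

# Regression: two predictions, each off by 0.5.
mse = mean_squared_error([3.0, 5.0], [2.5, 5.5])

# Classification: the true class (index 1) was given probability 0.7.
cce = categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1])

print(f"MSE: {mse:.4f}")
print(f"cross-entropy: {cce:.4f}")
```

Cross-entropy only looks at the probability assigned to the correct class, so it shrinks toward zero as that probability approaches 1.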

Backpropagation

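Backpropagation works out how much each weight and bias contributed to the loss so that they can be adjusted to reduce it. It applies the chain rule to calculate the gradient of the loss with respect to each parameter, propagating the error backward from the output layer toward the input layer.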
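The chain rule is easiest to follow on a very small network. The sketch below assumes a toy model with one input, one sigmoid hidden neuron, and one linear output, trained with a squared-error loss; all parameter values are made up. It computes the gradients by hand and compares them against a finite-difference estimate as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Toy network: h = sigmoid(w1*x + b1), y = w2*h + b2."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    return h, y

def loss(params, x, target):
    _, y = forward(params, x)
    return (y - target) ** 2

def backward(params, x, target):
    """Gradients of the loss w.r.t. [w1, b1, w2, b2] via the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dL_dy = 2.0 * (y - target)        # derivative of the squared error
    dL_dw2 = dL_dy * h                # y = w2*h + b2
    dL_db2 = dL_dy
    dL_dh = dL_dy * w2                # error flowing back into the hidden neuron
    dL_dz = dL_dh * h * (1.0 - h)     # derivative of sigmoid is h * (1 - h)
    dL_dw1 = dL_dz * x                # z = w1*x + b1
    dL_db1 = dL_dz
    return [dL_dw1, dL_db1, dL_dw2, dL_db2]

def numerical_gradient(params, x, target, eps=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads

params = [0.5, -0.1, 0.8, 0.2]  # illustrative values for w1, b1, w2, b2
analytic = backward(params, x=1.5, target=1.0)
numeric = numerical_gradient(params, x=1.5, target=1.0)
for name, a, n in zip(["w1", "b1", "w2", "b2"], analytic, numeric):
    print(f"dL/d{name}: analytic={a:+.6f}  numeric={n:+.6f}")
```

Deep learning libraries automate the `backward` step, but the computation they perform follows the same pattern: start from the loss and multiply local derivatives layer by layer.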

Optimization Algorithms

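Optimization algorithms such as gradient descent update the weights and biases by moving each parameter a small step in the direction that reduces the loss. The size of that step is controlled by the learning rate. Variants such as Stochastic Gradient Descent (SGD), which updates the parameters using small batches of data, and Adam, which adapts the step size for each parameter, are commonly used.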
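As a minimal sketch of the update rule, the code below runs plain (full-batch) gradient descent on a one-weight linear model with an MSE loss. The data, learning rate, and step count are assumptions chosen so that the example converges quickly; the points lie exactly on the line y = 2x + 1.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w*x + b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move each parameter a small step in the direction that lowers the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = gradient_descent(xs, ys)
print(f"learned w={w:.4f}, b={b:.4f}")
```

SGD would compute the same gradients on a random subset of the data at each step, trading some noise in the updates for much cheaper iterations on large datasets.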

4. Applications of Neural Networks

Image and Speech Recognition

Neural networks are used in facial recognition systems and voice assistants, enabling devices to understand and respond to human input.

Natural Language Processing (NLP)

NLP applications include language translation, sentiment analysis, and chatbots, helping machines understand and generate human language.

Autonomous Systems

Neural networks power self-driving cars and robots, allowing them to navigate and make decisions in real time.

Healthcare

Neural networks assist in disease prediction and medical image analysis, improving diagnostic accuracy and treatment planning.

Finance

In finance, neural networks are used for fraud detection, stock market prediction, and risk management, enhancing the decision-making process.