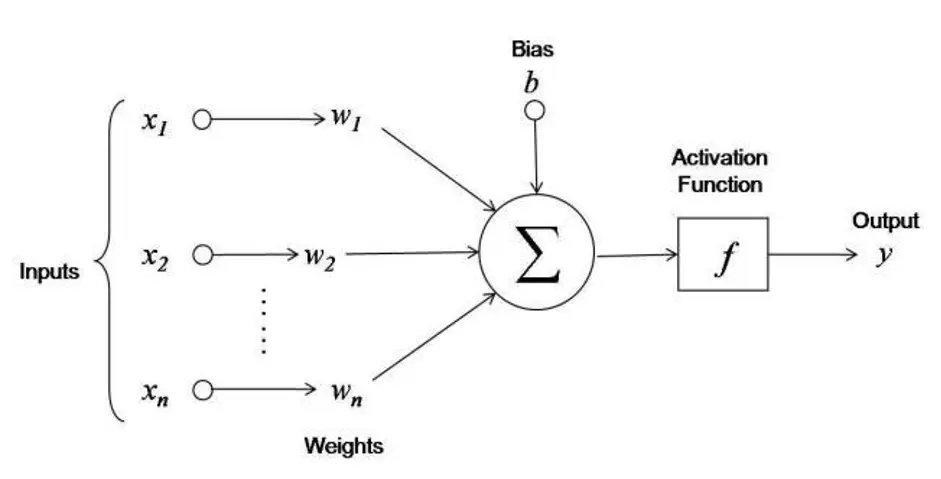

In neural networks, the activation function determines the output of a neuron. It introduces non-linearity into the network, allowing it to learn and model complex patterns in the data. Without activation functions, a neural network would behave like a single linear model no matter how many layers it had, because a composition of linear (affine) transformations is itself just one linear (affine) transformation.
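To see why depth alone does not help, note that two layers with no activation, $W_2(W_1x + b_1) + b_2$, equal a single layer with weights $W_2W_1$ and bias $W_2b_1 + b_2$. The NumPy sketch below checks this numerically; the weights, biases, and layer sizes are random, made-up values chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two "layers" with no activation function (illustrative random parameters)
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)

x = rng.normal(size=3)

# Stacked linear layers: W2 (W1 x + b1) + b2
two_layers = W2 @ (W1 @ x + b1) + b2

# The same mapping written as one linear layer
W = W2 @ W1
b = W2 @ b1 + b2
one_layer = W @ x + b

print(np.allclose(two_layers, one_layer))  # True
```

Inserting a non-linear function between the two layers breaks this equivalence, which is what lets extra layers add modelling power.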

After computing the weighted sum of the inputs plus the bias, $z = W_1X_1 + W_2X_2 + \ldots + W_nX_n + b$, we pass this value to an activation function $\phi$, which gives the output of the neuron, $\phi(z)$. Here each $X_i$ is the output of a neuron in the previous layer, and $W_i$ is the weight our neuron assigns to input $X_i$.
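As a minimal sketch of this computation for a single neuron, the following NumPy code forms $z$ and applies $\phi$. The helper name `neuron_output` and the input, weight, and bias values are hypothetical, and sigmoid is used as an example choice of $\phi$.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def neuron_output(x, w, b, phi):
    """Weighted sum of the inputs plus the bias, passed through activation phi."""
    z = np.dot(w, x) + b  # W1*X1 + W2*X2 + ... + Wn*Xn + b
    return phi(z)

# Hypothetical outputs of three neurons in the previous layer
x = np.array([0.5, -1.0, 2.0])
# Hypothetical weights and bias for our neuron
w = np.array([0.4, 0.3, -0.1])
b = 0.1

# z = 0.2 - 0.3 - 0.2 + 0.1 = -0.2, so the output is sigmoid(-0.2)
print(neuron_output(x, w, b, sigmoid))  # about 0.450
```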

Here’s an overview of some common activation functions:

- Sigmoid Activation Function
  - Formula: $\sigma(x) = \frac{1}{1 + e^{-x}}$
  - Range: (0, 1)
  - Use Case: Often used in the output layer of binary classification problems.
  - Pros: Smooth gradient; outputs can be interpreted as probabilities.
  - Cons: Saturates for large positive or negative inputs, where its derivative approaches zero (the derivative peaks at 0.25, at $x = 0$). This causes vanishing gradients and makes deep networks difficult to train.

- Tanh (Hyperbolic Tangent) Activation Function
  - Formula: $\tanh(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}}$
  - Range: (-1, 1)
  - Use Case: Generally preferred over sigmoid in hidden layers because its outputs are zero-centered.
  - Pros: Zero-centered; negative inputs map to negative outputs instead of to values near zero, and its derivative peaks at 1 (versus 0.25 for sigmoid).
  - Cons: Like sigmoid, it saturates for large $|x|$ and can suffer from vanishing gradients.

- ReLU (Rectified Linear Unit) Activation Function
  - Formula: $\text{ReLU}(x) = \max(0, x)$
  - Range: [0, ∞)
  - Use Case: Widely used in the hidden layers of deep learning models.
  - Pros: Computationally efficient; its gradient is exactly 1 for positive inputs, which mitigates the vanishing gradient problem.
  - Cons: Can cause the “dying ReLU” problem: if a neuron's weighted input is negative for every input, it always outputs zero, receives zero gradient, and stops learning.

- Leaky ReLU Activation Function
  - Formula: $\text{Leaky ReLU}(x) = \begin{cases} x & \text{if } x > 0 \\ \alpha x & \text{if } x \leq 0 \end{cases}$, where $\alpha$ is a small positive constant (0.01 is a common choice)
  - Range: (-∞, ∞)
  - Use Case: An alternative to ReLU that addresses the “dying ReLU” problem.
  - Pros: Keeps a small, nonzero gradient ($\alpha$) when the input is negative.
  - Cons: Still not zero-centered, and $\alpha$ is an extra hyperparameter to choose.

- Softmax Activation Function
  - Formula: $\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}}$
  - Range: (0, 1), with the outputs summing to 1
  - Use Case: Used in the output layer of multi-class classification problems.
  - Pros: Outputs a probability distribution over the classes.
  - Cons: Can be computationally expensive for a large number of classes, because every output requires a normalizing sum over all classes.
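Subtracting the same constant $c$ from every input leaves softmax unchanged, since $e^{x_i - c} / \sum_j e^{x_j - c} = e^{x_i} / \sum_j e^{x_j}$. Implementations commonly exploit this by subtracting the largest input first, so that the exponentials cannot overflow. A minimal NumPy sketch of that trick (the function name and sample logits are illustrative, not from a specific library):

```python
import numpy as np

def softmax(x):
    """Softmax over the last axis, shifted by the max for numerical stability."""
    shifted = x - np.max(x, axis=-1, keepdims=True)  # largest entry becomes 0
    exps = np.exp(shifted)                           # every value is in (0, 1]
    return exps / np.sum(exps, axis=-1, keepdims=True)

logits = np.array([2.0, 1.0, 0.1])
probs = softmax(logits)
print(probs, probs.sum())  # three probabilities that sum to 1

# Without the shift, np.exp(1000.0) would overflow to inf
print(softmax(np.array([1000.0, 1000.0])))  # [0.5 0.5]
```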

Importance of Activation Functions

Activation functions enable neural networks to:

- Capture non-linear relationships: By introducing non-linearity, activation functions allow neural networks to model complex patterns in data.

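- Handle complex decision boundaries: Non-linear activation functions let the network form curved or piecewise decision boundaries that can separate classes a single straight line (or hyperplane) cannot, such as the XOR pattern.

- Enable deep learning: Activation functions whose derivatives do not vanish over most of their input range (such as ReLU) let gradients propagate through many layers, which makes training deep networks practical.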
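The gradient point can be illustrated by comparing the derivatives of sigmoid, tanh, and ReLU. The sketch below is deliberately simplified: it ignores the weight terms that also appear in the chain rule and only looks at the product of activation derivatives, one per layer, in the best case for sigmoid ($x = 0$ at every layer). The helper names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1 - s)             # peaks at 0.25 when x = 0

def tanh_grad(x):
    return 1 - np.tanh(x) ** 2     # peaks at 1.0 when x = 0

def relu_grad(x):
    return (x > 0).astype(float)   # 1 for positive inputs, 0 otherwise

x = np.array([-5.0, 0.0, 5.0])
print("sigmoid':", sigmoid_grad(x))  # tiny at x = -5 and x = 5 (saturation)
print("tanh':   ", tanh_grad(x))
print("relu':   ", relu_grad(x))

# Backpropagation multiplies one local derivative per layer. Even in sigmoid's
# best case (0.25 per layer), the product shrinks geometrically with depth.
for depth in (1, 5, 10, 20):
    print(depth, 0.25 ** depth)
```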

Practical Examples

Example 1: Sigmoid Activation in Binary Classification

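```python
import numpy as np

def sigmoid(x):
    # Squash each raw score (logit) into the range (0, 1)
    return 1 / (1 + np.exp(-x))

# Sample input: raw scores, e.g. from a binary classifier's output neuron
x = np.array([-1.0, 0.0, 1.0, 2.0])

output = sigmoid(x)
print(output)  # approximately [0.269 0.5 0.731 0.881]
```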
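The `sigmoid` above computes `np.exp(-x)`, which overflows for large negative inputs such as `x = -1000.0` and makes NumPy emit a `RuntimeWarning`. One common workaround, sketched below with a hypothetical `stable_sigmoid` helper, only ever exponentiates a non-positive number. (SciPy also provides the logistic function as `scipy.special.expit`.)

```python
import numpy as np

def stable_sigmoid(x):
    """Sigmoid that avoids overflow in np.exp for large-magnitude inputs."""
    x = np.asarray(x, dtype=float)
    e = np.exp(-np.abs(x))  # always in (0, 1], so it cannot overflow
    # x >= 0: 1 / (1 + e^{-x});  x < 0: e^{x} / (1 + e^{x})
    return np.where(x >= 0, 1 / (1 + e), e / (1 + e))

x = np.array([-1000.0, -1.0, 0.0, 1.0, 1000.0])
print(stable_sigmoid(x))  # finite values, no overflow warning
```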

Example 2: ReLU Activation in Hidden Layer

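```python
import numpy as np

def relu(x):
    # Element-wise max(0, x): negative inputs become 0, positive inputs pass through
    return np.maximum(0, x)

# Sample input
x = np.array([-1.0, 0.0, 1.0, 2.0])

output = relu(x)
print(output)  # [0. 0. 1. 2.]
```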
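For comparison, here is a minimal sketch of Leaky ReLU on the same input, together with its gradient. The default `alpha=0.01` is an assumed, commonly used value for the $\alpha$ in the Leaky ReLU formula, and the function names are illustrative.

```python
import numpy as np

def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for x > 0, alpha * x otherwise."""
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    # The gradient is 1 for positive inputs and alpha (not 0) otherwise,
    # so a unit whose inputs are all negative can still receive updates.
    return np.where(x > 0, 1.0, alpha)

x = np.array([-1.0, 0.0, 1.0, 2.0])
print(leaky_relu(x))
print(leaky_relu_grad(x))
```

Unlike `relu`, the negative input -1.0 maps to a small negative value rather than to zero, and its gradient stays at `alpha`.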

Activation functions are fundamental components of neural networks. They introduce the necessary non-linearity that enables neural networks to learn from complex data and make accurate predictions. Understanding and choosing the right activation function is crucial for building effective deep learning models.