Normalization:

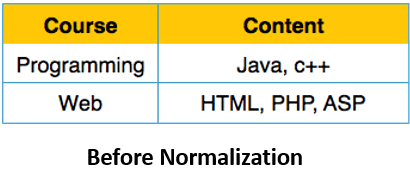

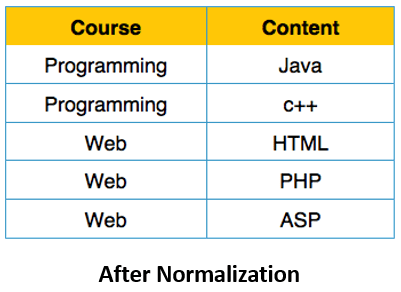

Normalization is a database design technique used to organize tables and reduce data redundancy in a relational database. The goal is to eliminate data anomalies (insert, update, and delete anomalies) and ensure that data is stored efficiently. Normalization is typically carried out through a series of normal forms (1NF, 2NF, 3NF, BCNF, 4NF, 5NF), each addressing specific issues.

Why Normalization?

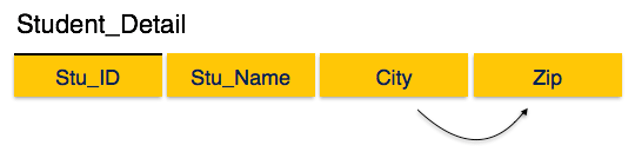

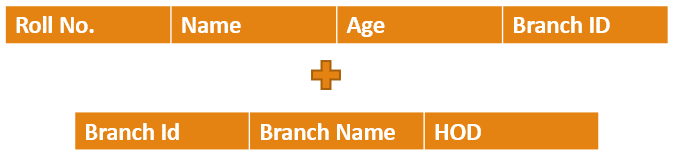

Following table has some issues which are not good for any DB, like the branch and branch id. Now Imagine if any day the Administration wish to change the Branch ID then all the records in the table needs to be changed.

| Roll No. | Name | Age | Branch | Branch ID |

| 1 | Anmol | 24 | CSE | 101 |

| 2 | Ansh | 23 | CSE | 101 |

| 3 | Akshay | 25 | CSE | 101 |

| 4 | Bhuvnesh | 26 | CSE | 102 |

Let’s understand the Anamolies in the Database First before moving to the Normalization

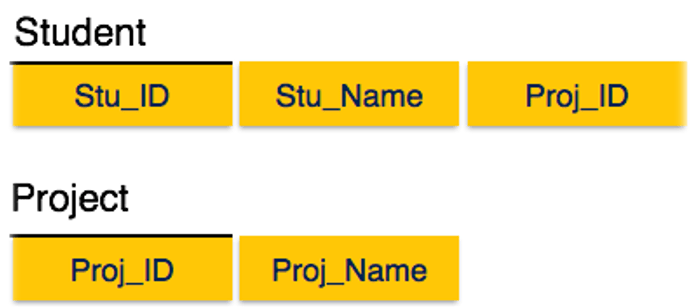

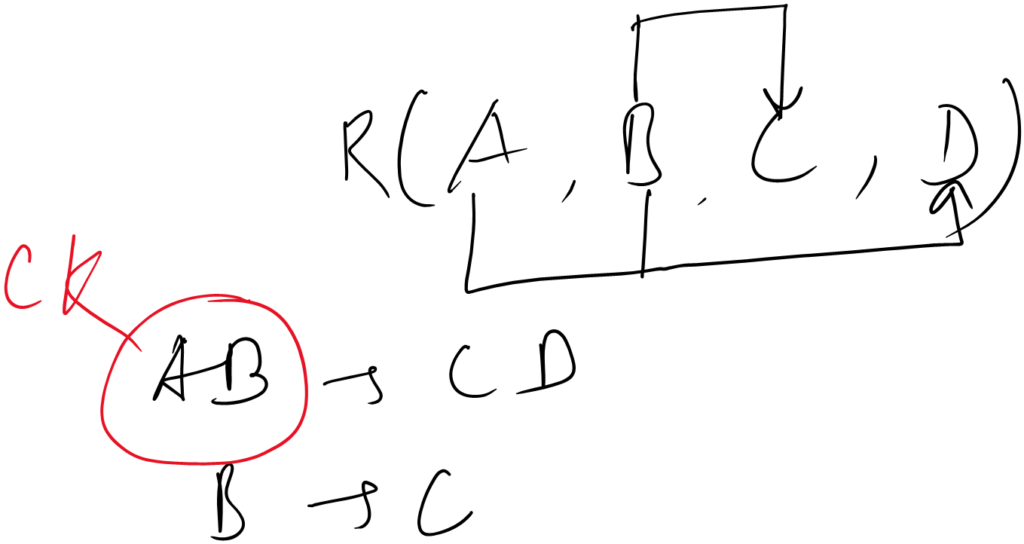

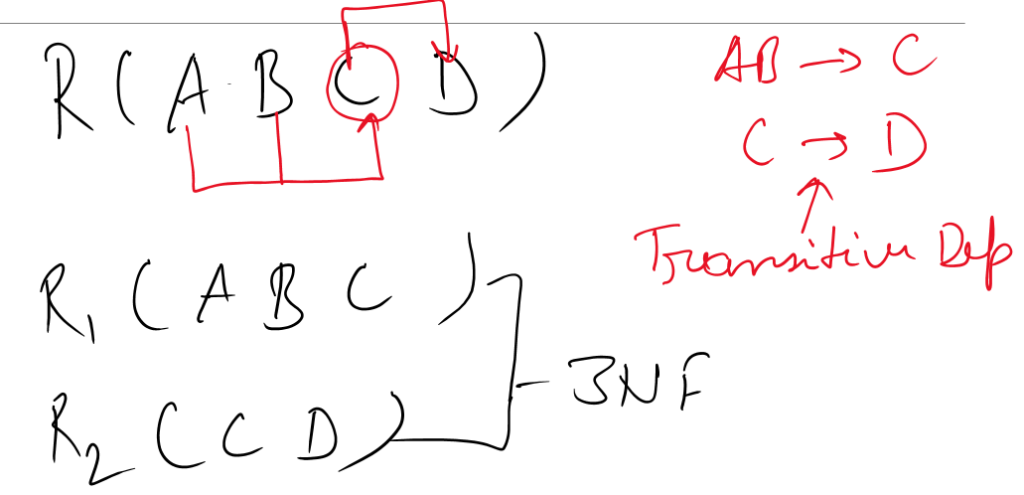

Let’s Understant all the Normal Forms

Normalized Form is below

Normal Forms

Denormalization:

Denormalization is the process of combining tables that have been normalized. While normalization reduces redundancy and improves data integrity, it can also lead to increased complexity in queries and potentially slower performance for read-heavy workloads. Denormalization aims to optimize query performance by reintroducing redundancy and reducing the number of joins needed for common queries.

Key Concepts:

- Increased Query Performance:

- Reduces the need for complex joins, leading to faster query performance.

- Useful in read-heavy scenarios, where optimizing for query speed is a priority.

- Data Redundancy:

- Reintroduces redundancy in the form of duplicated data.

- Can lead to potential data anomalies during updates, inserts, and deletes.

- Simplified Queries:

- Simplifies queries by reducing the number of tables involved.

- Useful for scenarios where the database is more read-centric than write-centric.

- Materialized Views:

- Denormalization can involve creating materialized views that store precomputed results of complex queries, further optimizing read operations.

Choosing Between Normalization and Denormalization:

- Use Normalization When:

- The database is write-intensive.

- Data integrity is of utmost importance.

- The database design needs to be flexible and adaptable.

- Use Denormalization When:

- The database is read-intensive, and query performance is a priority.

- There is a clear understanding of the read patterns and queries.

- The trade-off between redundancy and query speed is acceptable.

Conclusion:

Normalization and denormalization are complementary techniques that should be used based on the specific needs of a database. A balanced approach, often referred to as “controlled denormalization,” involves selectively denormalizing certain parts of the database to optimize for specific queries while maintaining overall data integrity. The choice between normalization and denormalization depends on the specific requirements and usage patterns of the application.